Parameterized route finding in ORBIS

Our initial steps were these: (a) create an API allowing a few simple RESTful queries of the ORBIS database (orbis.stanford.edu/api), (b) publish an RDF file which maps our URIs for 402 of ORBIS’ 751 sites to Pleiades places, and (c) build a simple dynamic map landing page for the URIs.

Project background

The initial phase of the ORBIS project was completed with a web site launch in May 2012. Development has largely paused for several months, but will resume in early 2013. The goal of the project is to model Roman communication costs in terms of both time and expense, and to permit scholars and the general public to interact with that model, by means of a route-finding web map and an interactive distance cartogram. By simulating movement along the principal routes of the Roman road network, the main navigable rivers, and hundreds of sea routes in the Mediterranean, Black Sea and coastal Atlantic, the model reconstructs the duration and financial cost of travel in antiquity. The purpose, development and use of the model and web application are described in considerable detail on the site.

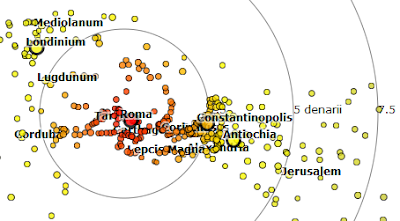

Interactive distance cartogram in ORBIS, showing ‘grain-distance’ to Rome during June, in denarii

So, what does ORBIS add to the growing graph of annotations to Pleiades places that Pelagios enables? The initial answer is that for each of 402 places we can tell you which Pleiades places are directly connected to it by a single network segment. In the coming weeks we will be extending both our annotations and the range of API queries, and will report on that here.

ORBIS places

The ORBIS database includes 751 sites, most of them urban settlements but also including important promontories and mountain passes; 268 of these served as sea ports. ORBIS sites are drawn, with a few adjustments, from the Barrington Atlas of the Greek and Roman World (Talbert 2000). The Barrington map key categorization for size and importance of settlements is maintained, in five rank classes (in ORBIS: increments of 10, 60 to 100). Of the 751 sites, 402 have been identified as identical with Pleiades places, and this is the basis for ORBIS’ connection to the Linked Data cloud.

The road network encompasses 84,631 kilometers of roads and desert tracks, and 28,272 kilometers of navigable rivers and canals. This multi-modal terrestrial network is described in the spatial database in terms of 625 distinct road segments and 107 distinct river or canal reaches. As an aside, these segments and reaches are places as well; certainly events have occurred at, on, or near them. Granted, the first order of business in our collective effort at large-scale digital historical gazetteers is point locations, principally for settlements. However, named and unnamed paths (e.g. roads, rivers) and potential or actual routes taken upon them (journeys or trajectories) are entities of interest as well, as sites of historical activity and events.

Creating an API

The ORBIS routing application calculates routes between sites, along a multimodal network comprising the terrestrial segments mentioned above, and a 0.1 degree lattice laid over the Mediterranean and Black Seas and North Atlantic Ocean. The route calculation accounts for 9 parameters, each with multiple possible values: start (639), destination (639), month (12), priority (3), network type (5! = 120), river vessel (2), sea vessel (2), land vehicle/mode (9), and price (3). Obviously, results for these billions of distinct combinations can’t be (and needn’t be) pre-calculated and published; a simplified API can deliver results programmatically. So, what to offer in an API? We have begun simply. You can request, for example:

- A dump of all 402 sites mapped to Pleiades places, in JSON format:

  http://orbis.stanford.edu/api/sites

- A listing of sites one network hop from a given site (using either Pleiades place ID or ORBIS site ID) by road or river, with segment length, e.g.

  http://orbis.stanford.edu/api/sites/108772

- A route between two places. There are four required parameters: start, destination, month and priority (fastest or shortest route). For example,

  http://orbis.stanford.edu/api/route_pl/79299/108772/6/1

  requests the shortest path between Aquae Sulis and Augusta Suessionum in June using Pleiades IDs, and returns a JSON object with the estimated trip distance and duration, and a sequence of 7 segments (6 road, 1 coastal sea). For each segment, distance, duration and type are returned as well.
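For anyone wanting to call these three endpoints from a script, here is a minimal Python sketch of how the URLs described above might be assembled. The helper names (`sites_url`, `neighbors_url`, `route_url`, `fetch_json`) are hypothetical and not part of ORBIS. The only priority code shown in this post is the `1` used in the example above, so the sketch passes the value through unchanged, and it makes no assumptions about the field names in the JSON that comes back.

```python
import json
from urllib.request import urlopen

# Base address taken from the examples in this post.
BASE = "http://orbis.stanford.edu/api"


def sites_url():
    """URL for the dump of all sites mapped to Pleiades places."""
    return f"{BASE}/sites"


def neighbors_url(site_id):
    """URL listing sites one network hop from the given site."""
    return f"{BASE}/sites/{int(site_id)}"


def route_url(start, destination, month, priority):
    """URL for a route request using Pleiades IDs.

    The order start/destination/month/priority follows the example
    in the post; month is checked to be a calendar month number.
    """
    month = int(month)
    if not 1 <= month <= 12:
        raise ValueError("month must be between 1 and 12")
    return (
        f"{BASE}/route_pl/{int(start)}/{int(destination)}"
        f"/{month}/{int(priority)}"
    )


def fetch_json(url, timeout=30):
    """Fetch a URL and decode the body as JSON (performs a network call)."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    # Rebuilds the Aquae Sulis to Augusta Suessionum request shown above.
    print(route_url(79299, 108772, 6, 1))
```

Calling `fetch_json(route_url(79299, 108772, 6, 1))` would then return whatever JSON object the service provides, assuming the API is reachable.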

API technical details

Because ORBIS uses PHP for database access, and the syntax we preferred uses slashes (like orbis.stanford.edu/{param1}/{param2}/{param3}), I found the Slim API framework (http://www.slimframework.com/) to be simple and effective. In our case this meant a central index.php referring to separate PHP scripts for each of our three offerings, and a single mod_rewrite statement added to our Apache web server configuration. The API queries are simplified versions of existing queries in the ORBIS application. For further details, just contact me.

RDF publication

Pelagios required first and foremost a web-published RDF document describing (minimally) mappings between ORBIS sites and Pleiades places, in the form suggested by the Open Annotation Collaboration (openannotation.org). One such entry is as follows:

<rdf:Description rdf:ID="orbis_50012">

<rdf:type rdf:resource="http://www.openannotation.org/ns/Annotation"/>

<oac:hasBody rdf:resource="http://pleiades.stoa.org/places/108751"/>

<oac:hasTarget rdf:resource="http://orbis.stanford.edu/api/site/50012"/>

<dcterms:creator rdf:resource="http://orbis.stanford.edu/"/>

<dcterms:title>The Roman era place, Ara Agrippinensium</dcterms:title>

</rdf:Description>

Generating this document was straightforward for us; the ORBIS sites table has always included a Pleiades ID field, and we had already made 402 mappings there. A SQL query that concatenated the necessary XML markup with site numbers and Pleiades IDs was a simple matter. The file can be freely downloaded, but is presently useful only to Pelagios.
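We did this with a SQL query, which is not reproduced here. As an illustration only, the same string concatenation can be sketched in Python. The function name `annotation_entry` and the two base-URL constants are hypothetical; the markup mirrors the sample entry above and leaves out the enclosing rdf:RDF element and namespace declarations that a complete file would need.

```python
from xml.sax.saxutils import escape, quoteattr

# Base URIs copied from the sample entry in this post.
PLEIADES_BASE = "http://pleiades.stoa.org/places/"
ORBIS_SITE_BASE = "http://orbis.stanford.edu/api/site/"


def annotation_entry(site_id, pleiades_id, title):
    """Build one annotation entry linking an ORBIS site to a Pleiades place.

    Illustrative only: this stands in for the SQL concatenation
    described in the post and emits a single rdf:Description element.
    """
    # quoteattr() returns the value already wrapped in quotes and escaped.
    rdf_id = quoteattr(f"orbis_{site_id}")
    body = quoteattr(PLEIADES_BASE + str(pleiades_id))
    target = quoteattr(ORBIS_SITE_BASE + str(site_id))
    lines = [
        f"<rdf:Description rdf:ID={rdf_id}>",
        '<rdf:type rdf:resource="http://www.openannotation.org/ns/Annotation"/>',
        f"<oac:hasBody rdf:resource={body}/>",
        f"<oac:hasTarget rdf:resource={target}/>",
        '<dcterms:creator rdf:resource="http://orbis.stanford.edu/"/>',
        # escape() protects characters such as & and < in the title text.
        f"<dcterms:title>{escape(title)}</dcterms:title>",
        "</rdf:Description>",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(annotation_entry(50012, 108751,
                           "The Roman era place, Ara Agrippinensium"))
```

Looping such a function over the rows of a table holding site numbers and Pleiades IDs would yield one entry per mapping.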

Web landing page

The URIs published in the above file have to resolve to a meaningful location. Navigating to http://orbis.stanford.edu/api/site/50012 loads a page with a small map with markers for that site and the sites directly connected by a single segment to it.

A few comments

The ORBIS site does offer the option to export lists of calculated routes as KML for Google mapping, or comma-delimited format (CSV) for more general use. This is good as far as it goes, but we are intrigued at the prospect of ORBIS routes and related data being mashed up in other applications, and see a potential ‘multiplier’ effect in this capability. The ORBIS project will also be a partner collection in Anvil Academic’s Built Upon digital publishing initiative, which may present novel opportunities for linked data applications.

Pelagios allows anyone referring to a Pleiades place to discover other annotations for it, now including those of ORBIS. However, given the parametric nature of ORBIS data, what can a simple static URI provide? For the time being, this is only the set of Pleiades places connected to that place by a single terrestrial segment. This seems to me of limited value, although as a Linked Data devotee I understand my job is simply to publish data, enabling applications we can’t necessarily predict. The API we’ve developed to return parameterized routes appears more immediately useful, and in fact we know of one pending application related to paths between coin finds and nearby mints. The annotation provided through Pelagios could be extended, but how? For example, would it be useful to provide, for a given place, distances and durations to all places of a given size or importance? Suggestions are most welcome.

______________________

Talbert, R. J. A. (ed.) 2000. Barrington Atlas of the Greek and Roman World. Princeton.